Understanding systems

Thinking before writing

When faced with a bug or feature request, there’s often pressure to start coding immediately. We’ve found this approach frequently leads to superficial solutions that don’t address root causes. Instead, at Equals we invest time in building a comprehensive mental model of the problem and related systems.

If we don’t have a complete understanding, we make changes that do not address the root cause of the issue, or we end up modeling the solution incorrectly. This results in further issues: edge cases we didn’t expect, or new symptoms of the same underlying problem. By taking the time to understand the issue, we steer our systems in a better direction over time and build elegant, maintainable solutions with fewer side effects and bugs.

Understand the system as designed

Before making changes, we strive to understand the original intention behind the existing code:

What specific problem was the code trying to solve?

What was the intended technical design and architecture?

What product constraints or assumptions influenced the current implementation? Were they correct?

The need to do this speaks to the importance of clear documentation; at Equals we favor writing down our plans and recording our investigations in our ticketing / work log system.

In understanding the intention behind code, we can question whether those intentions are correct today for the change we want to make. Perhaps we have learnt more about the problem, or we were just wrong in an assumption to begin with. We embrace changing decisions when we have more information.

Understand the actual system

Next, we strive to understand the data modeling in our current system. If our data modeling does not match the problem, there will always be conflict between the system as built and the system as desired.

Again, extensive writing as we explore a system is crucial, and having a whiteboard to scribble our abstract understanding onto is very handy.

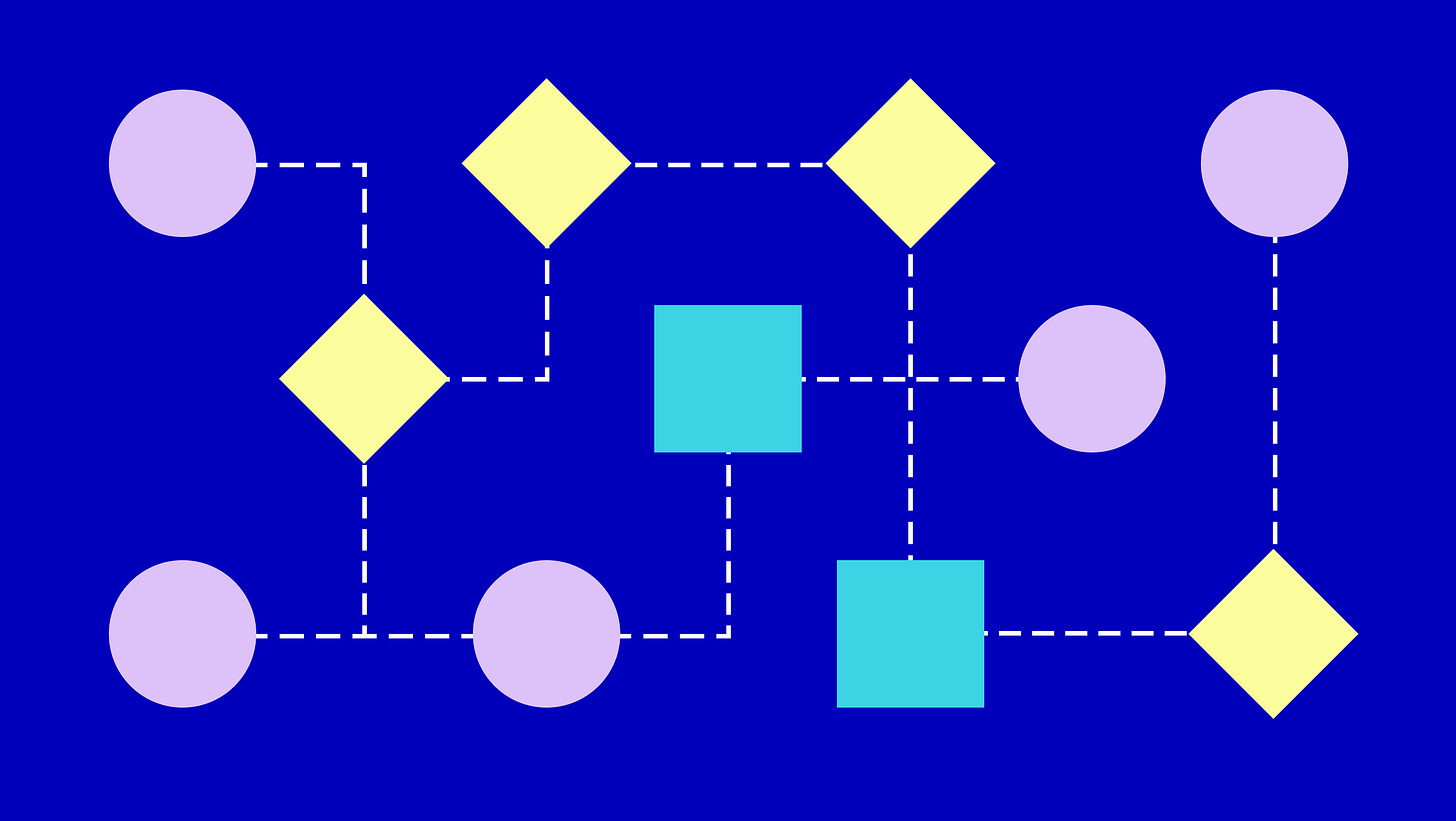

One technique I find particularly handy is to search for any conditional statements, and plot those as points on my whiteboard flow diagram. What does the data look like each time we branch?
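As a rough illustration of that search, here is a minimal sketch that lists the branch points in a piece of Python source using the standard library `ast` module. The `find_branch_points` helper and the `SAMPLE` snippet (including `recalculate`, `cell`, and `evaluate`) are hypothetical names made up for this example, not code from our system, and a real codebase in another language would need its own parser or a plain text search.

```python
import ast


def find_branch_points(source: str) -> list[tuple[int, str, str]]:
    """Return (line number, node kind, condition text) for each conditional.

    Covers `if`/`elif` statements, `while` loops, and inline `x if c else y`
    expressions. Filters inside comprehensions and `match` statements are
    not included in this sketch.
    """
    tree = ast.parse(source)
    branches = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.If, ast.While, ast.IfExp)):
            # ast.unparse (Python 3.9+) turns the condition back into source text.
            branches.append((node.lineno, type(node).__name__, ast.unparse(node.test)))
    return sorted(branches)


# Hypothetical code under investigation; it is only parsed, never executed.
SAMPLE = '''
def recalculate(cell):
    if cell.formula is None:
        return cell.value
    elif cell.is_dirty:
        return evaluate(cell)
    return cell.cached if cell.cached is not None else evaluate(cell)
'''

for lineno, kind, condition in find_branch_points(SAMPLE):
    print(f"line {lineno}: {kind} on `{condition}`")
```

Each entry in the output is a candidate point for the flow diagram; the question of what the data looks like at that branch still has to be answered by reading the code or inspecting a running system.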

We ask these questions:

What are the core entities?

How are these entities related?

Does our current data model accurately represent these entities and relationships?

Are there inconsistencies or redundancies in our data model?

How do data and control flow through the system?

What are the key algorithms and data structures?

How do different components interact?

Are there discrepancies between the intended design and the actual implementation?

We also need to understand how the system operates while running; this involves thinking about how state moves through the system and is updated. This is hard to capture: our brains have a limited context window.

System observability is key to this. We invest in traditional observability mechanisms (events, metrics, and logs), but also in tooling for hands-on debugging of particular issues. Spreadsheet workbooks can be constructed in ~infinite different forms, and being able to explore the state of a live spreadsheet is incredibly important.
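To make the idea of following state through a running system concrete, here is a minimal sketch of recording each state change as a structured event. It is an illustration under assumptions, not a description of our actual tooling: the `TrackedCell` class, its fields, and the event shape are all hypothetical, and only the standard library `logging`, `json`, and `dataclasses` modules are used.

```python
import json
import logging
from dataclasses import dataclass, field

logger = logging.getLogger("state_transitions")


@dataclass
class TrackedCell:
    """Hypothetical cell that records every value change as a structured event."""

    cell_id: str
    value: object = None
    history: list = field(default_factory=list)

    def update(self, new_value: object, reason: str) -> None:
        # Capture the old and new state together so the transition can be
        # reconstructed later without holding the whole system in our heads.
        event = {
            "event": "cell_updated",
            "cell_id": self.cell_id,
            "old": self.value,
            "new": new_value,
            "reason": reason,
        }
        self.history.append(event)
        # default=str keeps the log line serializable for non-JSON values.
        logger.info(json.dumps(event, default=str))
        self.value = new_value


cell = TrackedCell("A1")
cell.update(10, reason="user_edit")
cell.update(12, reason="recalculation")
```

Keeping the old value, the new value, and the reason in one event means a single log line answers "what changed, and why?" for that step.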